Introduction

Early in the history of software development, applications often ran as single-instance monoliths. They bundled the frontend (presentation), backend (business logic), database, and other integrations into one application. This approach had some benefits, such as simpler development and testing.

Deployment was as simple as copying the packaged app to the server, and scaling could be done by running multiple instances of the app behind a load balancer. However, this approach had its problems. For example, as the application grows, deployments take longer, because even a small change means redeploying the entire application.

Another problem was the infrastructure where apps were deployed. Apps required several dependencies to run successfully, which meant that developers had to install all the dependencies of the apps they developed.

You can see where this is going: teams ended up needing hardware that might only be necessary for one project. A change in the app's requirements could also force the purchase of new hardware.

Thankfully, cloud computing, containerization, and container orchestration have solved these problems.

In this article, you will learn briefly about containerization, container orchestration with Kubernetes, and how Kubernetes simplifies continuous deployment, scaling, and high availability of deployed applications.

Containerization

We cannot talk about Kubernetes without talking about containerization first, since Kubernetes is a container orchestration tool.

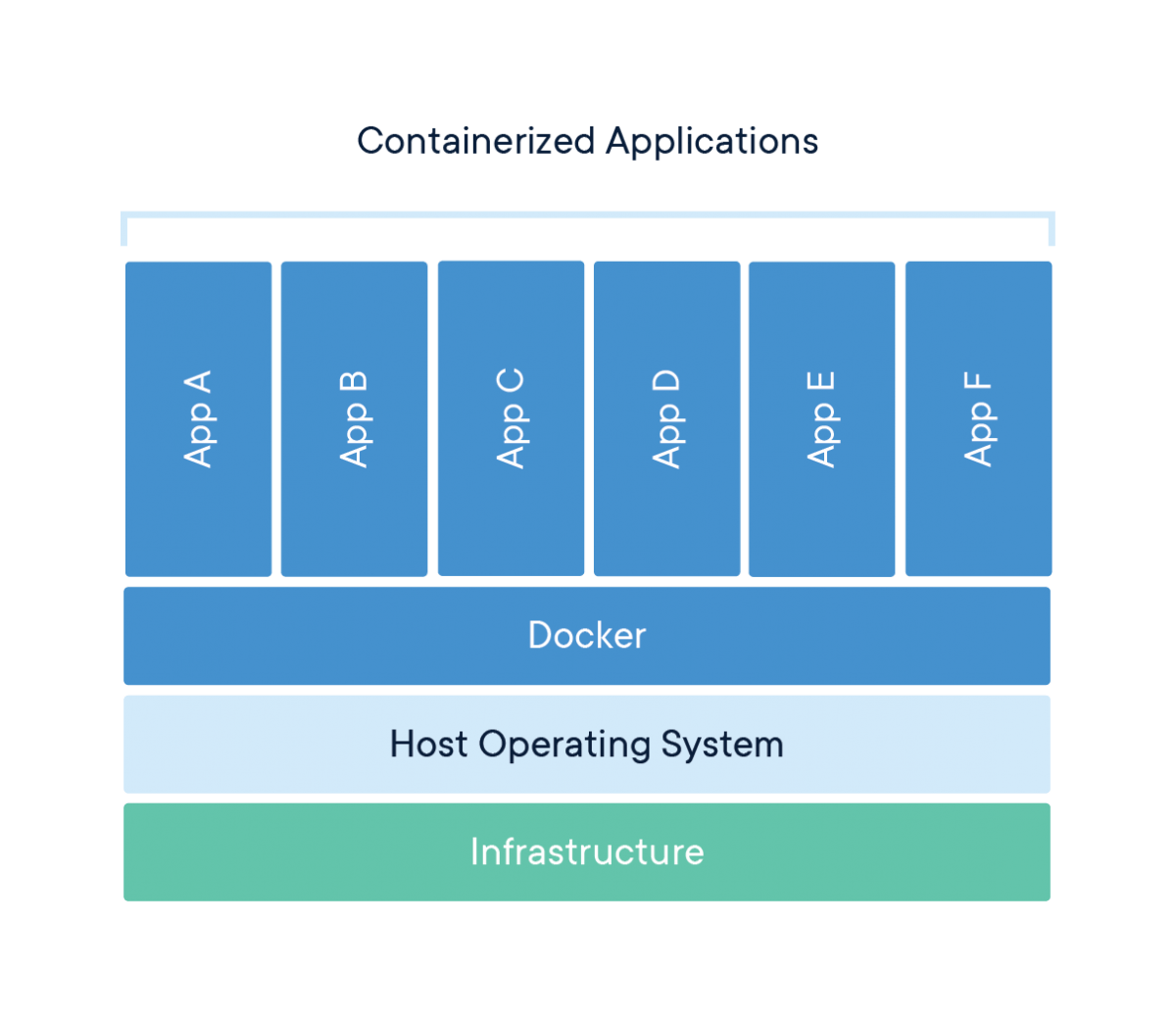

Containerization is the packaging of application code and its dependencies into containers for deployment.

According to Docker, "A container is a standard unit of software that packages up code and all its dependencies, so the application runs quickly and reliably from one computing environment to another."

Containers use operating-system-level virtualization. You can run multiple containers on the same operating system; they share its kernel but are isolated from one another.

What problems do containers solve?

- Isolation of concerns: since containers ship with the application's dependencies, you do not need to install those dependencies on your computer to run the app. Containers separate the infrastructure and its configuration from the code, which makes it easy to deploy the application on any infrastructure without worrying about installing dependencies.

- Dependency conflicts: containerization solves the problem of conflicts between different dependencies on a host operating system. With containers, you can maintain a consistent environment for development and production without a lot of configuration. This reduces the margin for error and failure.

Kubernetes

So what is Kubernetes? Kubernetes is a tool for managing the life cycle of containers. Google originally developed it, and it is now hosted by the Cloud Native Computing Foundation (CNCF). There are other container orchestration tools, such as Apache Mesos and Docker Swarm, but Kubernetes is widely used and has become the de facto standard for managing containerized deployments in the cloud. So why do I use Kubernetes, and what makes it so special?

Open source: Kubernetes is free for everyone to use.

Ease of deployment: Kubernetes makes it easy to deploy your application to cloud platforms and on-premises infrastructure. Because it uses containerization, all you have to do is specify your container image and any additional configuration you need. For example, the following command deploys a simple application that runs Nginx with one replica:

kubectl create deployment nginx --image=nginx --replicas=1

In the above command:

- kubectl is the command-line tool for executing Kubernetes commands.

- create deployment nginx: creates a deployment named nginx.

- --image=nginx: specifies that its pods should run the official nginx image, pulled from Docker Hub by default.

- --replicas=1: specifies that the deployment should run one pod.

Easy, right?
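You can also declare the same nginx deployment in YAML, which is how most teams keep their configuration. The sketch below uses a small, hypothetical shell helper, `render_deployment`, that prints a minimal `apps/v1` Deployment manifest. The `app: <name>` label is an illustrative convention, not something Kubernetes requires.

```shell
# Hypothetical helper: print a minimal Deployment manifest.
# Usage: render_deployment NAME IMAGE REPLICAS
#   e.g. render_deployment nginx nginx 1 | kubectl apply -f -
render_deployment() {
  local name=$1 image=$2 replicas=$3
  # The selector must match the pod template labels (here: app=NAME).
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
EOF
}
```

Piping the output into `kubectl apply -f -` would create the deployment. Re-running it with a different replica count would update the deployment in place.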

Horizontal scalability: Imagine you run an e-commerce business. During festive periods, the number of customers on your website increases, and so does the pressure on your resources. To prevent downtime, you would have to either provision more resources (CPU, memory) or increase the number of instances of your application.

After the festive period, the pressure on your resources drops. What happens to those extra resources? If you are using a cloud platform like GCP or AWS, you could delete them manually. But then you would have to monitor your resources actively to know when traffic surges and when it drops, so that you can respond accordingly and save costs.

In the worst case, if you host your service yourself, you would have to purchase hardware that you may only need once a year. That's not good for your business.

With Kubernetes, you can scale your application up and down easily, and Kubernetes can even do it automatically for you. A HorizontalPodAutoscaler adds pod replicas when load rises and removes them when it falls. On supported cloud platforms, the Cluster Autoscaler can also add or remove nodes to match. This saves you cost and time and reduces the margin for error.
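The scaling decision itself is simple arithmetic. The Kubernetes documentation describes the HorizontalPodAutoscaler's core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The hypothetical `desired_replicas` function below sketches that rule with shell integer arithmetic, clamped to a minimum and maximum. It deliberately ignores the autoscaler's tolerance and stabilization behavior, so treat it as an illustration rather than a reimplementation.

```shell
# Sketch (not Kubernetes code) of the documented HPA scaling rule:
#   desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
# clamped to [MIN, MAX]. Tolerance and stabilization windows are ignored.
# Usage: desired_replicas CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC MIN MAX
desired_replicas() {
  local current=$1 metric=$2 target=$3 min=$4 max=$5
  # Integer ceiling division: ceil(a / b) == (a + b - 1) / b for positive ints.
  local desired=$(( (current * metric + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}
```

Consider four replicas averaging 90% CPU against a 60% target: `desired_replicas 4 90 60 1 10` prints `6`. In a real cluster you would let Kubernetes apply the rule, for example with `kubectl autoscale deployment nginx --cpu-percent=60 --min=1 --max=10`. That assumes a metrics source such as metrics-server is installed.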

Self-healing: Containers running your applications can sometimes fail because of errors. Kubernetes restarts containers that crash or fail their health checks, and it replaces pods that are deleted or lost when a node fails, all without your intervention.
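Health checks are what let Kubernetes notice a failing container. The sketch below is a hypothetical helper, `render_probed_container`, that prints a container entry with an HTTP liveness probe. The `/healthz` path in the usage example and the timing values are assumptions; adjust them for your app.

```shell
# Hypothetical helper: print one container entry (for spec.containers)
# with an HTTP liveness probe. If GET HTTP_PATH on PORT keeps failing,
# the kubelet restarts the container.
# Usage: render_probed_container NAME IMAGE PORT HTTP_PATH
render_probed_container() {
  local name=$1 image=$2 port=$3 http_path=$4
  cat <<EOF
- name: $name
  image: $image
  ports:
    - containerPort: $port
  livenessProbe:
    httpGet:
      path: $http_path
      port: $port
    initialDelaySeconds: 5
    periodSeconds: 10
    failureThreshold: 3
EOF
}
```

For example, `render_probed_container web nginx 80 /healthz` describes a container that the kubelet restarts after three consecutive failed probes (`failureThreshold: 3`).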

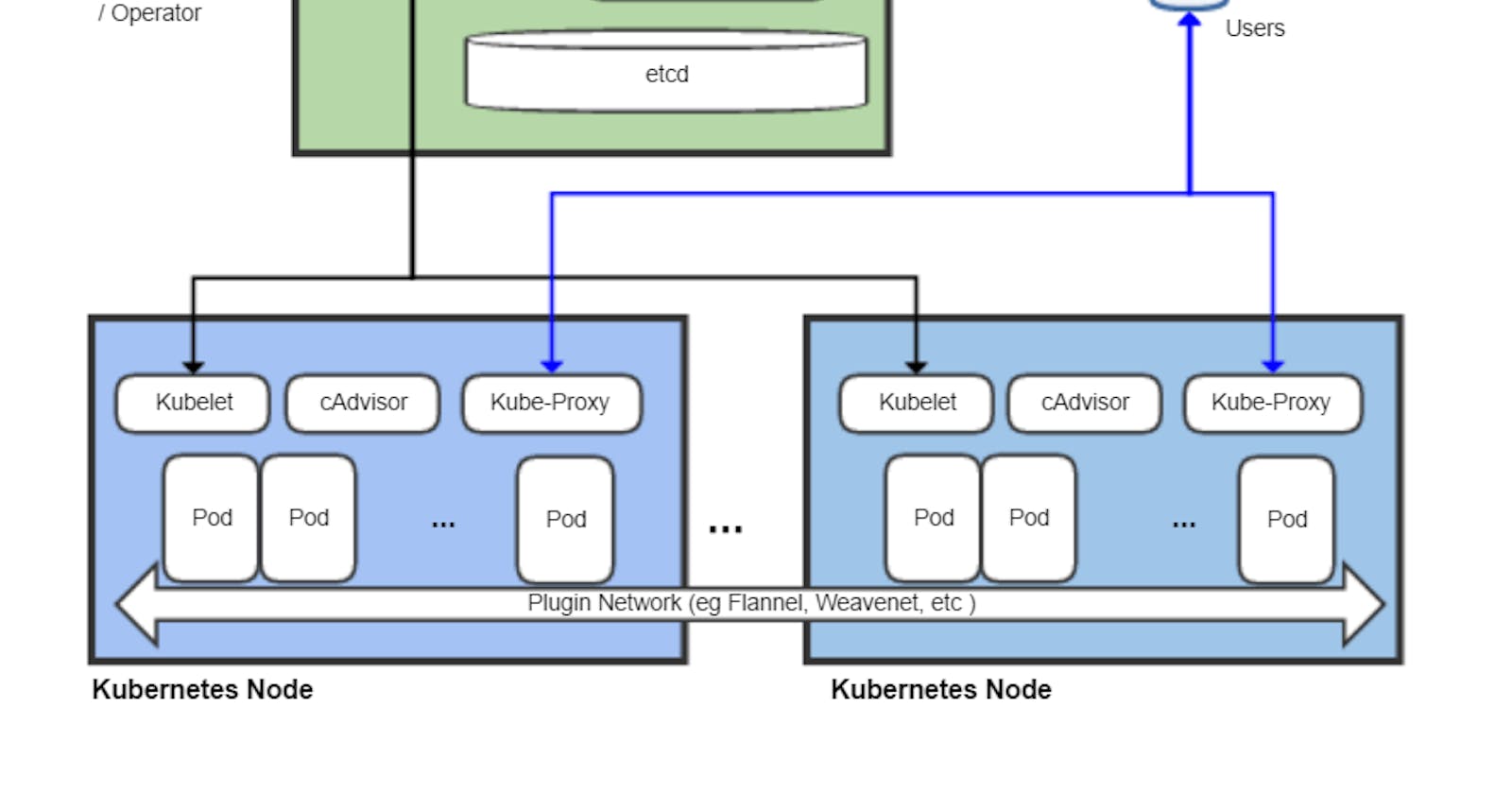

Zero downtime: Kubernetes uses pods to run the containers that host your applications. Deployments and ReplicaSets manage these pods. When you create a deployment, it creates a ReplicaSet. The ReplicaSet keeps track of how many pods are running and starts or removes pods until that number matches the count you specified. This ensures that throughout the lifetime of your application, it runs the correct number of pods.
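The counting a ReplicaSet does is a control loop: observe, compare, correct. The function below is a toy model of that idea written for this article, not Kubernetes code. Given a desired and an observed pod count, it only reports the correction a controller would make.

```shell
# Toy model of a ReplicaSet-style control loop: compare the desired
# replica count with the pods actually running and report the fix.
# Usage: reconcile DESIRED RUNNING
reconcile() {
  local desired=$1 running=$2
  if [ "$running" -lt "$desired" ]; then
    echo "create $((desired - running))"
  elif [ "$running" -gt "$desired" ]; then
    echo "delete $((running - desired))"
  else
    echo "ok"
  fi
}
```

`reconcile 3 1` prints `create 2`. This mirrors what happens when two of three pods crash: the ReplicaSet starts two replacements.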

When you update a deployment, for example to run a new image version, Kubernetes creates a new ReplicaSet alongside the previous one. It starts new pods from the new ReplicaSet without first killing the old pods. Once the new pods pass their health checks (readiness probes) and are ready, Kubernetes terminates old pods and starts the remaining new pods, a few at a time. This continues until the new ReplicaSet runs the full number of replicas. Because some pods keep serving traffic throughout this rolling update, there is zero downtime during the deployment process.
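A Deployment's `RollingUpdate` strategy controls how many old and new pods coexist during a rollout through the fields `maxSurge` and `maxUnavailable`, which both default to 25%. According to the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The hypothetical `rollout_bounds` function sketches that calculation for percentage values.

```shell
# Sketch: turn RollingUpdate percentages into pod counts.
# maxSurge rounds up, maxUnavailable rounds down.
# Prints: <max pods during rollout> <min available pods>
# Usage: rollout_bounds REPLICAS MAX_SURGE_PCT MAX_UNAVAILABLE_PCT
rollout_bounds() {
  local replicas=$1 surge_pct=$2 unavail_pct=$3
  local surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceiling
  local unavail=$(( replicas * unavail_pct / 100 ))      # floor
  echo "$((replicas + surge)) $((replicas - unavail))"
}
```

With the defaults and `replicas: 4`, `rollout_bounds 4 25 25` prints `5 3`. At most five pods exist during the rollout, and at least three stay available. Setting `maxUnavailable` to 0 keeps the full replica count available throughout.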

Rollouts and rollbacks: Kubernetes records a revision each time a deployment's pod template changes, so you can quickly revert changes made to your deployment configuration. If you deploy a new version of your app and realize it is faulty, you can roll back to a previous working version.
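Rollbacks are driven by `kubectl rollout undo`. The sketch below wraps it in a hypothetical `rollback_deployment` function. The `KUBECTL` variable is an assumption added so that you can print the commands (`KUBECTL=echo`) instead of running them against a cluster.

```shell
# Hypothetical wrapper around the real `kubectl rollout undo` subcommand.
# Set KUBECTL=echo to print the command instead of running it.
# Usage: rollback_deployment NAME [REVISION]
rollback_deployment() {
  local name=$1 revision=${2:-}
  local kubectl=${KUBECTL:-kubectl}
  if [ -n "$revision" ]; then
    "$kubectl" rollout undo "deployment/$name" --to-revision="$revision"
  else
    # Without --to-revision, kubectl reverts to the previous revision.
    "$kubectl" rollout undo "deployment/$name"
  fi
}
```

Running `kubectl rollout history deployment/nginx` first lists the revision numbers you can pass as the second argument.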

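Storage orchestration: With Kubernetes, you can easily provision storage for your running applications. Applications request storage through PersistentVolumeClaims. Kubernetes binds each claim to a PersistentVolume, which can be created on demand from a StorageClass backed by local disks or cloud storage.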
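A PersistentVolumeClaim is how an application asks for storage. The hypothetical `render_pvc` helper below prints a minimal claim. It assumes the cluster has a default StorageClass, so Kubernetes can provision a matching volume automatically.

```shell
# Hypothetical helper: print a PersistentVolumeClaim requesting SIZE of
# ReadWriteOnce storage. No storageClassName is set, so the cluster's
# default StorageClass (if any) is used.
# Usage: render_pvc NAME SIZE   (e.g. render_pvc data 1Gi)
render_pvc() {
  local name=$1 size=$2
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $size
EOF
}
```

A pod then mounts the claim by name through a `persistentVolumeClaim` volume.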

Version control: Because the desired state of your cluster and deployments is defined in code (YAML manifests), you can manage this code with a version control system and store it in a central repository. This helps you track changes to your infrastructure and deployment configuration and makes collaboration within a team easier.
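Keeping manifests in Git pairs well with previewing changes before applying them. The hypothetical `apply_manifests` helper below runs `kubectl diff` and then `kubectl apply` on a directory. As in the other sketches, `KUBECTL` is an assumed override for printing the commands. `kubectl diff` exits with status 1 when it finds differences, so the function only aborts on statuses above 1.

```shell
# Hypothetical helper: preview, then apply, the manifests in DIR.
# kubectl diff exits 0 (no changes), 1 (changes found) or >1 (error).
# Set KUBECTL=echo to print the commands instead of running them.
# Usage: apply_manifests DIR
apply_manifests() {
  local dir=$1 kubectl=${KUBECTL:-kubectl} rc=0
  "$kubectl" diff -f "$dir" || rc=$?
  if [ "$rc" -gt 1 ]; then
    return "$rc"   # a real error, not just "differences found"
  fi
  "$kubectl" apply -f "$dir"
}
```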

Large community and support: Because Kubernetes is open source, it has a vast user base and community. As a result, it is continuously being improved, and there are plenty of learning materials available.

Service discovery: Pods are the smallest deployable units in Kubernetes. They are temporary and can be killed at any time, and a replacement pod gets a new IP address. Kubernetes has a native system for managing communication with these pods and load balancing traffic among them. It assigns each pod an IP address upon creation and lets you group pods with labels. A Service uses those labels to identify the pods running a specific application, gives them a stable IP address and DNS name, and sends traffic to them.
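A Service manifest expresses this label-based grouping. The hypothetical `render_service` helper below prints a Service that sends traffic to every pod labeled `app=<APP>`. The names and ports in the usage example are assumptions.

```shell
# Hypothetical helper: print a Service that load-balances traffic on PORT
# across all pods labeled app=APP, forwarding it to TARGET_PORT.
# Usage: render_service NAME APP PORT TARGET_PORT
#   e.g. render_service web nginx 80 80
render_service() {
  local name=$1 app=$2 port=$3 target=$4
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  selector:
    app: $app
  ports:
    - port: $port
      targetPort: $target
EOF
}
```

Inside the cluster, other pods can then reach the Service by DNS at `<NAME>.<NAMESPACE>.svc.cluster.local`, assuming the default cluster domain.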

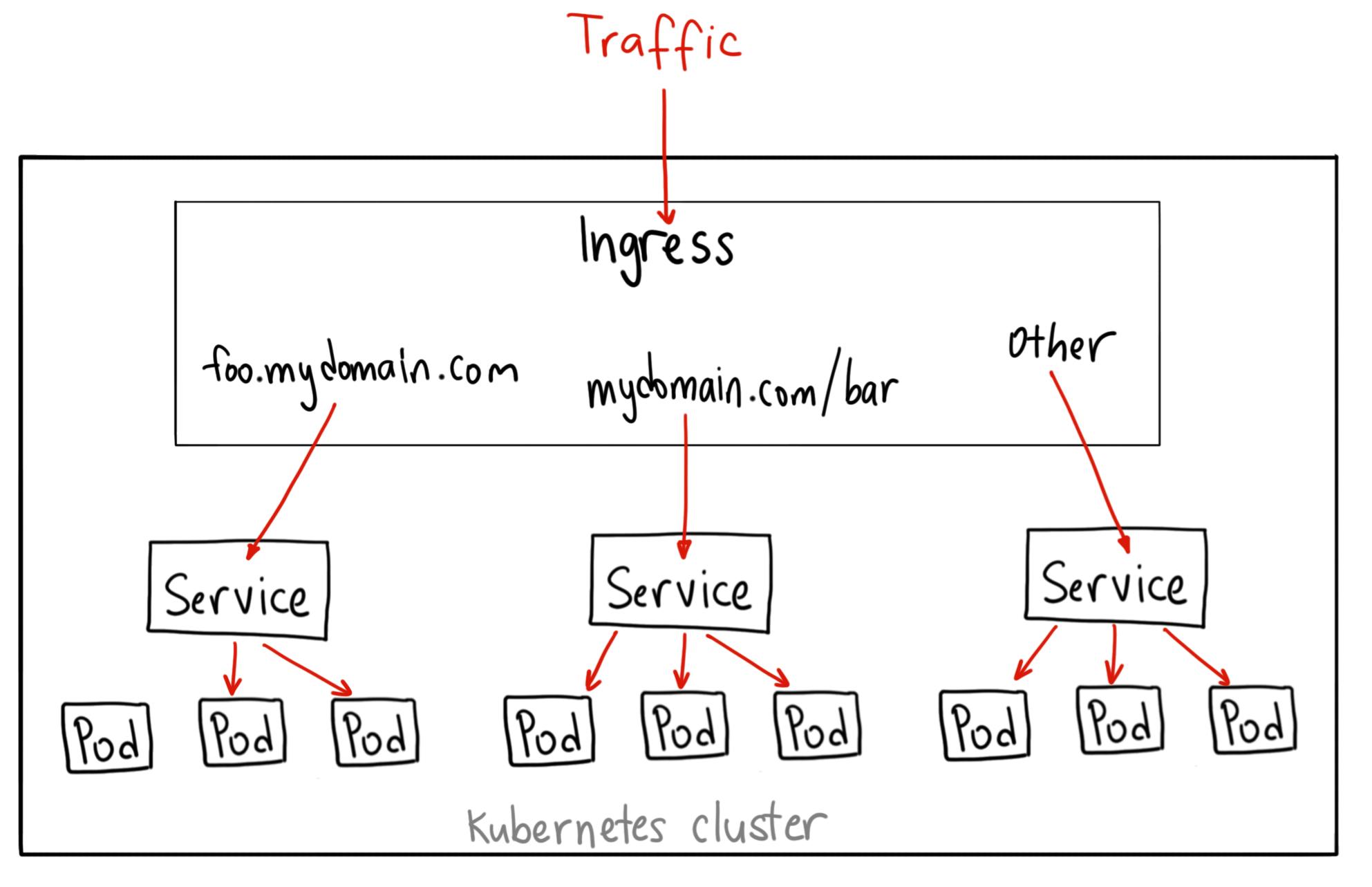

Load balancing: Kubernetes makes load balancing straightforward. With an ingress controller and Ingress rules, a single load balancer can send traffic to different applications. The controller routes each request to the appropriate pods based on rules such as the request's host name or URL path.
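You declare Ingress rules as an Ingress resource. The hypothetical `render_ingress` helper below prints one that sends `/api` requests to one Service and everything else to another. It assumes an ingress controller is installed and registered under the class name `nginx`. The resource name `shop` and port 80 are also placeholders.

```shell
# Hypothetical helper: print an Ingress routing HOST/api to API_SERVICE
# and every other path on HOST to WEB_SERVICE (both on port 80).
# Requires an ingress controller; the "nginx" class name is an assumption.
# Usage: render_ingress HOST API_SERVICE WEB_SERVICE
render_ingress() {
  local host=$1 api=$2 web=$3
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shop
spec:
  ingressClassName: nginx
  rules:
    - host: $host
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: $api
                port:
                  number: 80
          - path: /
            pathType: Prefix
            backend:
              service:
                name: $web
                port:
                  number: 80
EOF
}
```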

Resource management: When defining the specification for your applications, you can specify the resources that each container will consume. You can set the amount of CPU and memory each container requests, which the scheduler uses to place pods on nodes with enough capacity. You can also set a limit on how much the container may consume. This prevents one resource-hungry application from starving the other applications running on the same nodes.
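Requests and limits live in each container's `resources` section. The hypothetical `render_resources` helper below prints that section. The values in the usage example are illustrative: 250m is a quarter of a CPU core, and 128Mi is 128 mebibytes of memory.

```shell
# Hypothetical helper: print a container's resources section.
# Requests are what the scheduler reserves; limits cap actual usage.
# Usage: render_resources CPU_REQ MEM_REQ CPU_LIM MEM_LIM
#   e.g. render_resources 250m 128Mi 500m 256Mi
render_resources() {
  cat <<EOF
resources:
  requests:
    cpu: $1
    memory: $2
  limits:
    cpu: $3
    memory: $4
EOF
}
```

A container that exceeds its memory limit can be OOM-killed, while CPU usage above the limit is throttled rather than killed.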

Another reason I use Kubernetes is portability. You can migrate your application from one cloud platform to another with minimal effort, because your deployment configuration is declared in YAML files that you can largely reuse on any Kubernetes cluster. Provider-specific settings, such as storage classes or load balancer annotations, may still need adjusting.
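Because the same manifests work across clusters, you can deploy to two providers by switching kubeconfig contexts with kubectl's `--context` flag. The hypothetical `apply_everywhere` helper below sketches this. The context names in the example are placeholders for whatever `kubectl config get-contexts` lists, and `KUBECTL` is an assumed override for printing the commands.

```shell
# Hypothetical helper: apply the manifests in DIR to each listed
# kubeconfig context, stopping at the first failure.
# Set KUBECTL=echo to print the commands instead of running them.
# Usage: apply_everywhere DIR CONTEXT...
#   e.g. apply_everywhere k8s/ gke-prod eks-prod
apply_everywhere() {
  local dir=$1 kubectl=${KUBECTL:-kubectl} ctx
  shift
  for ctx in "$@"; do
    "$kubectl" --context "$ctx" apply -f "$dir" || return
  done
}
```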

Conclusion

In this article, we have discussed the features that make Kubernetes such a valuable tool in the deployment process. I hope you now have a better understanding of the value of Kubernetes and why you should use it in your deployment process.